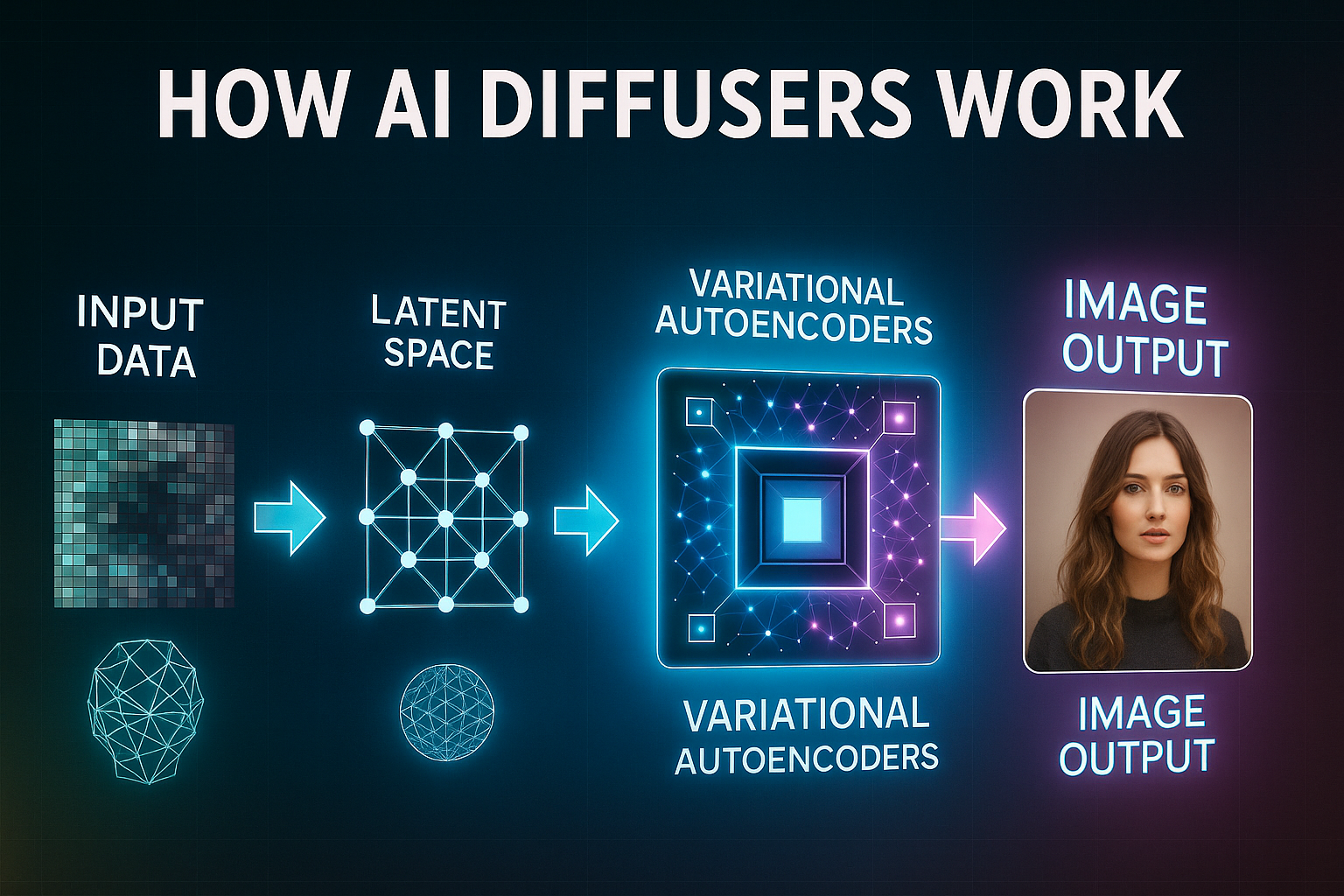

Hugging Face’s Diffusers library has become a core part of many creative AI projects. Originally built to make diffusion-based image generation accessible, it now gives you a wide range of diffusion models in a single Python toolkit, for everything from AI art to text-to-video animation.

So how does Diffusers work, who’s using it, and why does it appeal to both beginners and AI power users? Let’s break it down.

What Is Diffusers?

Diffusers is an open-source Python library created by Hugging Face that lets you easily run, fine-tune, and experiment with all kinds of diffusion-based models. It supports tasks like:

- Text-to-image: Turn prompts into high-quality artwork or photos.

- Image-to-image: Transform, stylize, inpaint, or outpaint existing images.

- Text-to-video & image-to-video: Generate short video clips from text prompts or from a single still image.

- Other creative uses: Audio generation, 3D synthesis, and multimodal AI workflows.
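
The image tasks above correspond to pipeline classes in Diffusers. The sketch below maps each task name to one of the library's `AutoPipelineFor*` classes and runs it. The task names, the `generate` helper, and the default SDXL model id are illustrative choices, not part of the article. It assumes `torch`, `diffusers`, and a CUDA GPU are available when `generate` is actually called; the heavy imports live inside the function so the mapping can be inspected without them.

```python
# Minimal sketch: choose a Diffusers AutoPipeline class per task and run it.
# The task names and the `generate` helper are hypothetical conveniences.

TASK_TO_PIPELINE = {
    "text-to-image": "AutoPipelineForText2Image",
    "image-to-image": "AutoPipelineForImage2Image",
    "inpainting": "AutoPipelineForInpainting",
}


def pipeline_class_name(task: str) -> str:
    """Return the AutoPipeline class name for a task, or raise ValueError."""
    try:
        return TASK_TO_PIPELINE[task]
    except KeyError:
        raise ValueError(f"unsupported task: {task!r}") from None


def generate(task, prompt, model_id="stabilityai/stable-diffusion-xl-base-1.0",
             image=None, mask_image=None, seed=0):
    """Load a pipeline for `task` and return the first generated PIL image."""
    # Imported lazily: only needed when generation actually runs.
    import torch
    import diffusers

    pipe_cls = getattr(diffusers, pipeline_class_name(task))
    pipe = pipe_cls.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

    kwargs = {"prompt": prompt,
              "generator": torch.Generator("cuda").manual_seed(seed)}  # reproducible seed
    if image is not None:        # image-to-image and inpainting need a source image
        kwargs["image"] = image
    if mask_image is not None:   # inpainting also needs a mask (white = repaint)
        kwargs["mask_image"] = mask_image
    return pipe(**kwargs).images[0]


# Example usage (downloads several GB of weights on first run):
# generate("text-to-image", "a lighthouse at dusk, oil painting").save("lighthouse.png")
```

For image-to-image or inpainting, pass a PIL image (for example from `diffusers.utils.load_image`) as `image`, plus `mask_image` for inpainting.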

You can access hundreds of models—Stable Diffusion, SDXL, DreamShaper, AnimateDiff, Stable Video Diffusion, and more—directly from the Hugging Face Model Hub.

Why Advanced Users Love Diffusers

1. Powerful and Flexible Workflows

- Build custom image and video pipelines with Python scripting.

- Automate batch generations or create advanced editing workflows.

- Mix and match models, samplers (called schedulers in Diffusers), and settings for unique results.
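
Mixing samplers and settings is mostly a matter of swapping `pipe.scheduler` and varying seeds and step counts. Below is a minimal sketch of a comparison grid, assuming you already have a loaded pipeline object `pipe`. The `plan_grid` and `render_grid` helpers are hypothetical; `from_config` and `pipe.scheduler.config` are the standard Diffusers pattern for changing schedulers, and the scheduler classes named here exist in the library, though you should check `pipe.scheduler.compatibles` for your model.

```python
import itertools

# Scheduler class names from diffusers; verify against pipe.scheduler.compatibles.
SCHEDULERS = ["EulerDiscreteScheduler", "DPMSolverMultistepScheduler", "DDIMScheduler"]


def plan_grid(schedulers, seeds, steps):
    """Every (scheduler, seed, steps) combination, in a stable order."""
    return list(itertools.product(schedulers, seeds, steps))


def render_grid(pipe, prompt, grid):
    """Render one image per grid cell; returns {(scheduler, seed, steps): image}."""
    import torch
    import diffusers

    results = {}
    for scheduler_name, seed, num_steps in grid:
        scheduler_cls = getattr(diffusers, scheduler_name)
        # Build the new scheduler from the current config so shared settings
        # (beta schedule, number of training timesteps, ...) carry over.
        pipe.scheduler = scheduler_cls.from_config(pipe.scheduler.config)
        generator = torch.Generator(device=pipe.device).manual_seed(seed)
        image = pipe(prompt, num_inference_steps=num_steps, generator=generator).images[0]
        results[(scheduler_name, seed, num_steps)] = image
    return results


# Example: 3 schedulers x 2 seeds x 2 step counts = 12 images.
# images = render_grid(pipe, "a foggy forest", plan_grid(SCHEDULERS, [1, 2], [20, 30]))
```

Fixing the seed per cell isolates the effect of the scheduler and step count, which makes side-by-side comparisons meaningful.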

2. Huge Model Variety

- Supports top models for images (Stable Diffusion, SDXL, DreamShaper, etc.).

- Works with popular video models (Stable Video Diffusion, AnimateDiff, ModelScope).

- Ready for multimodal workflows: for example, generate an image from text, then animate it with an image-to-video model.
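
As one example of the video side, here is a sketch of image-to-video with Stable Video Diffusion, following the usage pattern in the Diffusers docs. It assumes the `stabilityai/stable-video-diffusion-img2vid-xt` checkpoint (you may need to accept its license on the Hub), a CUDA GPU, and the `accelerate` package for CPU offloading. The `center_crop_box` helper is a hypothetical addition that crops the input to 16:9 before resizing it to the 1024×576 resolution the model expects, so the picture is not stretched.

```python
def center_crop_box(width, height, ratio_w=16, ratio_h=9):
    """Return a (left, top, right, bottom) box cropping to ratio_w:ratio_h.

    Integer arithmetic avoids floating-point rounding surprises.
    """
    if width * ratio_h > height * ratio_w:
        # Too wide: keep the full height, trim the sides.
        new_w = height * ratio_w // ratio_h
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Too tall (or already exact): keep the full width, trim top and bottom.
    new_h = width * ratio_h // ratio_w
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


def image_to_video(image_path, out_path="generated.mp4", seed=42):
    """Animate a still image with Stable Video Diffusion and save an MP4."""
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    pipe = StableVideoDiffusionPipeline.from_pretrained(
        "stabilityai/stable-video-diffusion-img2vid-xt",
        torch_dtype=torch.float16, variant="fp16",
    )
    pipe.enable_model_cpu_offload()  # lowers VRAM use at some speed cost

    image = load_image(image_path)
    image = image.crop(center_crop_box(*image.size)).resize((1024, 576))

    generator = torch.manual_seed(seed)
    # decode_chunk_size controls how many frames the VAE decodes at once.
    frames = pipe(image, decode_chunk_size=8, generator=generator).frames[0]
    # Writing MP4 may require an extra video library, depending on your setup.
    export_to_video(frames, out_path, fps=7)
    return out_path
```

Chaining this after a text-to-image call gives the text, then image, then video flow described above.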

3. Easy Model Download and Management

- Download models from the Hugging Face Hub with a single call, or upload your own.

- Supports LoRA adapters, textual inversion embeddings, and fine-tuning to capture a personal style.
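
LoRA adapters and textual inversion embeddings are loaded onto an existing pipeline. The sketch below uses the Diffusers methods `load_lora_weights`, `set_adapters` (which needs the `peft` package installed), and `load_textual_inversion`. The `"source:scale"` string format and the `parse_lora_spec` and `apply_styles` helpers are hypothetical conveniences for a command-line script, and the embedding loading shown targets Stable Diffusion 1.x/2.x-style pipelines.

```python
def parse_lora_spec(spec, default_scale=1.0):
    """Split a hypothetical 'repo_or_path[:scale]' string into (source, scale)."""
    source, sep, tail = spec.rpartition(":")
    if sep:
        try:
            return source, float(tail)
        except ValueError:
            pass  # e.g. a Windows drive letter such as "C:\\loras\\x.safetensors"
    return spec, default_scale


def apply_styles(pipe, lora_specs=(), embeddings=()):
    """Load LoRA adapters with per-adapter weights, then textual inversions."""
    adapters = []
    for i, spec in enumerate(lora_specs):
        source, scale = parse_lora_spec(spec)
        name = f"style_{i}"
        # Repos holding several weight files also need weight_name="...".
        pipe.load_lora_weights(source, adapter_name=name)
        adapters.append((name, scale))
    if adapters:
        pipe.set_adapters([n for n, _ in adapters],
                          adapter_weights=[s for _, s in adapters])
    for embedding in embeddings:
        pipe.load_textual_inversion(embedding)  # adds a new token to the tokenizer
    return pipe


# Example (the LoRA repo id is a placeholder):
# apply_styles(pipe, ["your-username/your-style-lora:0.8"],
#              ["sd-concepts-library/cat-toy"])
# image = pipe("a <cat-toy> on a beach").images[0]
```

Naming each adapter lets you blend several styles at different strengths instead of fusing them permanently into the model.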

4. Integration & Automation

- Connect Diffusers to other AI tools, such as Oobabooga’s text-generation-webui, ComfyUI, or your own custom interfaces.

- Run scripts locally or on cloud servers for large projects.

- Batch-process large jobs for content creation, art projects, or research.
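
A batch script mostly needs deterministic seeds, predictable filenames, and a way to resume after interruption, whether it runs on a laptop or a cloud GPU. The sketch below assumes an already-loaded pipeline `pipe`. All helper names (`pick_device`, `slugify`, `plan_jobs`, `run_batch`) and the filename scheme are hypothetical; the only library calls are `torch.Generator(...).manual_seed` and the pipeline call itself.

```python
import re
from pathlib import Path


def pick_device(cuda_available, mps_available):
    """Prefer a CUDA GPU (typical on cloud servers), then Apple MPS, then CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def slugify(text, max_len=40):
    """Turn a prompt into a filesystem-friendly filename stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return slug[:max_len].rstrip("-") or "prompt"


def plan_jobs(prompts, seeds_per_prompt=2, base_seed=1000):
    """Assign every (prompt, variation) a fixed seed and output filename."""
    jobs = []
    for i, prompt in enumerate(prompts):
        for j in range(seeds_per_prompt):
            seed = base_seed + i * seeds_per_prompt + j
            jobs.append((prompt, seed, f"{i:04d}_{slugify(prompt)}_{seed}.png"))
    return jobs


def run_batch(pipe, prompts, out_dir="outputs", **plan_kwargs):
    """Generate every planned image, skipping files that already exist."""
    import torch

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for prompt, seed, filename in plan_jobs(prompts, **plan_kwargs):
        path = out / filename
        if path.exists():  # lets an interrupted run resume where it stopped
            continue
        generator = torch.Generator(device=pipe.device).manual_seed(seed)
        pipe(prompt, generator=generator).images[0].save(path)


# Example:
# import torch
# device = pick_device(torch.cuda.is_available(), torch.backends.mps.is_available())
# pipe.to(device)
# run_batch(pipe, Path("prompts.txt").read_text().splitlines())
```

Because seeds are derived from each prompt's position, re-running the same prompt list reproduces the same images.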

5. Custom Training & Research

- Fine-tune models on private datasets for specialized art or effects.

- Prototype new architectures and techniques by swapping individual components (scheduler, UNet, VAE) or trying community pipelines.
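
Fine-tuning a diffusion model usually rests on the denoising objective: pick a random timestep t, mix noise ε into a clean sample x_0 as x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ᾱ_t is the running product of (1 − β_s), and train the model to predict ε. In Diffusers, `DDPMScheduler.add_noise` performs this noising step on tensors. The dependency-free sketch below shows the same arithmetic on plain lists for an epsilon-prediction model; the `model` argument is a hypothetical stand-in, and no gradient update is performed.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances (the schedule from the original DDPM paper)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alphas_cumprod(betas):
    """abar_t = prod_{s <= t} (1 - beta_s)."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def add_noise(x0, noise, abar_t):
    """Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    a, b = math.sqrt(abar_t), math.sqrt(1.0 - abar_t)
    return [a * x + b * n for x, n in zip(x0, noise)]


def mse(pred, target):
    """Mean squared error between two equal-length lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def training_step_loss(model, x0, abar, rng=None):
    """Loss for one sample: noise it at a random t, score the noise prediction."""
    if rng is None:
        rng = random.Random()
    t = rng.randrange(len(abar))
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = add_noise(x0, noise, abar[t])
    return mse(model(x_t, t), noise)  # epsilon-prediction target is the noise
```

A real fine-tuning loop applies this to VAE latents with a neural network and an optimizer; the structure of the loss is the same.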

Where to Find Models for Diffusers

- Hugging Face Model Hub:

  - Massive collection for text-to-image (Stable Diffusion, SDXL), image-to-image, and video generation (Stable Video Diffusion, AnimateDiff, ModelScope).

- Civitai:

  - Community-uploaded models, LoRAs, styles, and add-ons. Look for files in formats Diffusers can load, such as single-file .safetensors checkpoints and LoRAs.

- Official GitHub repositories:

  - For experimental and early-access models.
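
Hub models and single-file downloads load through different entry points: `from_pretrained` for a Hub repo id or a folder in the Diffusers layout, and `from_single_file` for one checkpoint file, as many community downloads are. The sketch assumes a Stable Diffusion 1.x checkpoint; for SDXL files, `StableDiffusionXLPipeline` offers the same two methods. The suffix check and the `is_single_file` and `load_sd15_pipeline` helpers are hypothetical, and note that LoRA files also end in .safetensors but belong in `load_lora_weights`, not here.

```python
from pathlib import Path

# Suffixes commonly used for single-file checkpoints.
SINGLE_FILE_SUFFIXES = {".safetensors", ".ckpt"}


def is_single_file(source):
    """True if `source` looks like a single checkpoint file rather than a repo id."""
    return Path(source).suffix.lower() in SINGLE_FILE_SUFFIXES


def load_sd15_pipeline(source):
    """Load an SD 1.x pipeline from a Hub repo id/folder or a single checkpoint file."""
    import torch
    from diffusers import StableDiffusionPipeline

    if is_single_file(source):
        # One-file checkpoint, e.g. a community download.
        return StableDiffusionPipeline.from_single_file(source, torch_dtype=torch.float16)
    # Hub repo id or local folder with the multi-folder Diffusers layout.
    return StableDiffusionPipeline.from_pretrained(source, torch_dtype=torch.float16)
```

Once loaded, `pipe.save_pretrained("my-model")` converts a single-file checkpoint into the standard Diffusers folder layout.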

Important Points to Remember

- Open Source and Community Driven:

  - Get support, scripts, and updates from Hugging Face and a large online community.

- Script-Based (Not a GUI):

  - Best for users with some Python experience. If you prefer a graphical interface, try a UI such as ComfyUI; for the most detailed, scriptable control, use Diffusers directly.

- Endless Creative Control:

  - From simple prompts to complex animation workflows, Diffusers lets you customize each stage of the generation pipeline. Just bring your ideas.

Final Thoughts

Diffusers has become a backbone of modern open-source AI image and video generation. Whether you want to make AI art, generate animations, or experiment with custom models and creative workflows, Diffusers gives you professional-grade control without the closed systems or usage limits of online-only tools.

For anyone serious about exploring, automating, or scaling generative AI, Diffusers is the toolkit you’ll want in your arsenal.